On Balance: Evaluating Security Projects Using Benefit-Cost, Risk, and Decision Analysis

Applying benefit-cost analysis to homeland security regulations and related applications is difficult, in part because of problems in measuring security or the risk avoided (Roberts, 2019; Farrow and Shapiro, 2009). The Office of University Programs within the Department of Homeland Security (DHS) asked our team of benefit-cost, decision, and risk analysts to evaluate changes in DHS security practices based on DHS-funded research and development (R&D) projects over the last 15 years. Initial results have been published in von Winterfeldt et al. (2019), with additional submissions planned for peer-reviewed journals.


While DHS sought a monetized benefit-cost analysis based on case studies, early scoping of the project left room for multi-criteria decision analysis, cost-effectiveness analysis, and qualitative analysis. Our team developed new ways to modify and implement standard models and, somewhat to our surprise, found that benefit-cost analysis was feasible in almost all cases. Along the way, we learned a great deal about the power of basic benefit-cost analysis, as well as lessons about baselines, context, and the use of sensitivity and uncertainty analysis with sparse data.

The task was to analyze case studies, drawn from several hundred potential candidates, that DHS agencies believed had provided the highest net value; these were identified through an iterative process with the agencies. Our team conducted 19 case studies of actual or potential security improvements of interest to the US Coast Guard, the Transportation Security Administration (TSA), and Customs and Border Protection (CBP). The retrospective cases, in which the improvement had already been implemented, tended to have better administrative data on costs, changes in behavior, and outcomes, while the prospective cases relied more on model-based assumptions.

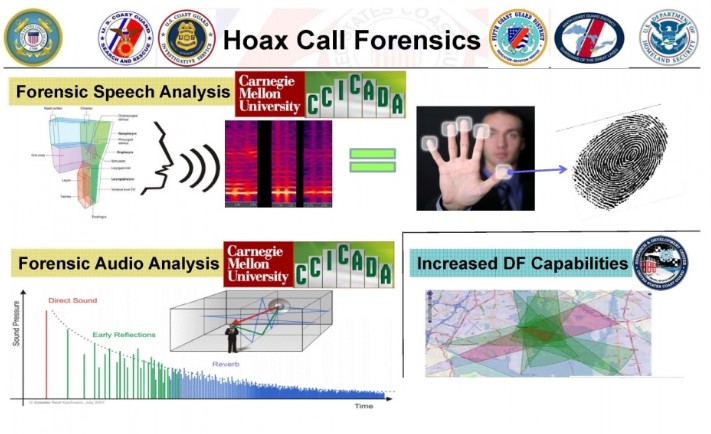

Examples of the cases studied included new ways to assign security patrols that account for game-theoretic responses and randomization, new airport scanning technologies, and new decision support tools such as aids for identifying hoax rescue calls. Each study required obtaining data on R&D and implementation costs, developing a benefit model, and carrying out a net present value analysis, all without the use of classified data.
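
To make the net present value step concrete, here is a minimal Python sketch. The cost and benefit figures, the seven-year horizon (years 0 to 6), and the 3% and 7% discount rates are hypothetical placeholders chosen for illustration, not values from the case studies; the function name `npv` is likewise our own.

```python
from typing import Sequence


def npv(net_flows: Sequence[float], rate: float) -> float:
    """Present value of annual net flows (benefits minus costs); year 0 is undiscounted."""
    return sum(flow / (1.0 + rate) ** year for year, flow in enumerate(net_flows))


# Hypothetical figures in $ millions, for illustration only.
RD_COST = 2.0          # year 0: R&D spending
IMPLEMENTATION = 1.0   # year 1: deployment cost
ANNUAL_BENEFIT = 1.5   # years 2-6: avoided losses or cost savings per year

FLOWS = [-RD_COST, -IMPLEMENTATION] + [ANNUAL_BENEFIT] * 5

if __name__ == "__main__":
    for rate in (0.03, 0.07):
        print(f"discount rate {rate:.0%}: NPV = {npv(FLOWS, rate):.2f} $M")
```

Because the benefits arrive after the costs, a higher discount rate lowers the NPV, so reporting results at more than one rate is a simple first sensitivity check.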

As expected, developing case-specific benefit models and data proved challenging, but we were able to adapt and apply a few common and some less common benefit models, such as those for technological change, value of information, and false negative/false positive test screening.
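
The false negative/false positive screening model can be sketched as a comparison of the expected cost of screening errors per item, before and after a new technology. The error rates, threat prevalence, traffic volume, and cost figures below are hypothetical, as are the names `Screener`, `expected_error_cost`, and `annual_screening_benefit`; a full analysis would also subtract the technology's incremental R&D and operating costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Screener:
    false_negative_rate: float  # P(item passes | threat present)
    false_positive_rate: float  # P(alarm | no threat present)


def expected_error_cost(s: Screener, p_threat: float,
                        cost_miss: float, cost_false_alarm: float) -> float:
    """Expected cost of screening errors for one screened item."""
    missed_threats = p_threat * s.false_negative_rate * cost_miss
    false_alarms = (1.0 - p_threat) * s.false_positive_rate * cost_false_alarm
    return missed_threats + false_alarms


def annual_screening_benefit(old: Screener, new: Screener, volume: float,
                             p_threat: float, cost_miss: float,
                             cost_false_alarm: float) -> float:
    """Gross annual benefit: reduction in expected error costs across all items."""
    per_item = (expected_error_cost(old, p_threat, cost_miss, cost_false_alarm)
                - expected_error_cost(new, p_threat, cost_miss, cost_false_alarm))
    return volume * per_item


if __name__ == "__main__":
    # Hypothetical legacy and upgraded scanners.
    legacy = Screener(false_negative_rate=0.10, false_positive_rate=0.05)
    upgraded = Screener(false_negative_rate=0.05, false_positive_rate=0.02)
    benefit = annual_screening_benefit(
        legacy, upgraded, volume=1_000_000, p_threat=1e-6,
        cost_miss=1e8, cost_false_alarm=20.0)
    print(f"gross annual benefit = ${benefit:,.0f}")
```

With rare threats, the false-alarm term applies to almost every item, so even small changes in the false positive rate can matter as much as changes in the miss rate.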

"Case Study: Coast Guard Patrols Changed Using New Tools"

Photo Credit: DVIDS, US Coast Guard Petty Officer 3rd Class Steven Strohmaier

Among the lessons learned while completing the case studies are:

- Context and data: Getting access to experts in the US Coast Guard, TSA, and CBP was critical both to identify an appropriate model and to inform data gathering. Although we were authorized to access agency personnel, we often found it challenging to reconstruct baseline information when it was not already in the administrative record.

- A non-random sample of cases can be appropriate: Benefit-cost theory recommends that a fixed budget be invested in projects with positive net benefits, taken in descending order of net benefit per dollar (Bellinger, 2018). As we learned, a retrospective evaluation of such decisions can therefore be informed by a non-random sample that follows the same ordering.

- A few benefit models spanned most of the applications: Technological change, cost-effectiveness, value of inputs, testing (false negative/false positive), and value of information were among our most frequently used models.
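
The budget decision rule attributed to Bellinger (2018) in the list above can be sketched as a greedy ranking: drop projects with non-positive net benefit, sort the rest by net benefit per dollar of cost, and fund down the list until the budget runs out. The project names and figures are hypothetical. For indivisible projects this greedy pass is a heuristic rather than a guaranteed optimum, since the exact problem is a knapsack problem.

```python
from typing import List, NamedTuple


class Project(NamedTuple):
    name: str
    pv_benefit: float  # present value of benefits, $M
    pv_cost: float     # present value of costs, $M (assumed > 0)

    @property
    def net_benefit(self) -> float:
        return self.pv_benefit - self.pv_cost


def fund_by_ratio(projects: List[Project], budget: float) -> List[Project]:
    """Greedy allocation by net benefit per dollar (a heuristic for indivisible projects)."""
    candidates = [p for p in projects if p.net_benefit > 0 and p.pv_cost > 0]
    candidates.sort(key=lambda p: p.net_benefit / p.pv_cost, reverse=True)
    funded: List[Project] = []
    spent = 0.0
    for p in candidates:
        if spent + p.pv_cost <= budget:  # skip projects that no longer fit
            funded.append(p)
            spent += p.pv_cost
    return funded


# Hypothetical portfolio.
PORTFOLIO = [
    Project("A", pv_benefit=10.0, pv_cost=4.0),  # net 6.0, ratio 1.5
    Project("B", pv_benefit=9.0, pv_cost=3.0),   # net 6.0, ratio 2.0
    Project("C", pv_benefit=5.0, pv_cost=5.0),   # net 0.0, excluded
    Project("D", pv_benefit=6.0, pv_cost=4.0),   # net 2.0, ratio 0.5
]

if __name__ == "__main__":
    chosen = fund_by_ratio(PORTFOLIO, budget=8.0)
    print([p.name for p in chosen])
```

With a budget of 8, projects B and A are funded and D is skipped because it no longer fits. A retrospective study that wants to examine the strongest investments would sample from the top of the same ranking.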

"Case Study: Technology to Identify and Reduce Hoax Rescue Calls to the Coast Guard"

Photo Credit: DVIDS, US Coast Guard Lt. Charles Clark

- Theory and sparse data: Basic theory often helped us make the most of limited data, at the cost of assuming rational behavior. For instance, assuming that marginal costs are linear between optimal points produced measurable Harberger-type triangles for the increase in net benefits from security. This approach did not require measuring security itself, only eliciting expenditures at common points, which was new in our experience.

- Incorporating risk and uncertainty: This lesson took two forms. First, risk (or risk avoided) should be included as part of the conceptual model (we defined risk as probability times consequence). Second, uncertainty was pervasive, so we made extensive use of Monte Carlo simulation and stress testing (tornado diagrams). This approach helps settle, for instance, whether returns could have been negative under some scenarios even when most scenarios lead to positive net benefits.
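
The Harberger-type triangle mentioned under theory and sparse data can be illustrated with a textbook case. This is our simplification, not necessarily the exact construction used in the case studies: suppose an R&D product lowers the constant marginal cost of a unit of security from $c_0$ to $c_1 < c_0$, the agency chooses security optimally so that marginal benefit equals marginal cost at $s_0$ before and $s_1$ after, and the line joining those two optimal points is straight. The integral of marginal benefit between the optima is then a trapezoid, and the gain in net benefits splits into a rectangle of savings on baseline security plus a triangle for the induced increase in security:

```latex
\Delta NB = \int_{s_0}^{s_1} MB(s)\,ds - c_1 s_1 + c_0 s_0,
\qquad
\int_{s_0}^{s_1} MB(s)\,ds = \tfrac{1}{2}\,(c_0 + c_1)\,(s_1 - s_0)

\Delta NB = \underbrace{(c_0 - c_1)\,s_0}_{\text{savings on baseline security}}
          + \underbrace{\tfrac{1}{2}\,(c_0 - c_1)\,(s_1 - s_0)}_{\text{Harberger triangle}}
          = \tfrac{1}{2}\,(c_0 - c_1)\,(s_0 + s_1)
```

This simplified version still uses units of security; it does not reproduce how the case studies substituted elicited expenditures for them.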

Although we intended to sample R&D security projects with a high present value of net benefits, we found that a few projects originally thought to be winners had a meaningful probability of a negative net present value or only moderately positive net benefits. A few projects, however, had very high net benefits. Aggregated across the 19 research projects funded by the Office of University Programs in the Science and Technology Directorate, the evidence-based result was a positive net present value exceeding the entire academic research budget that DHS expended to support the homeland security effort.
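
A finding such as "a meaningful probability of a negative net present value" comes from the kind of risk and uncertainty analysis described in the lessons above. The sketch below combines a small Monte Carlo simulation with a one-way sensitivity table of the kind plotted as a tornado diagram. Annual benefits are modeled as risk avoided (reduction in annual attack probability times consequence), following the definition of risk used in the text; every distribution and range, the 10-year horizon, the 7% discount rate, and all function names are hypothetical assumptions.

```python
import math
import random
import statistics
from typing import Dict, List, Tuple

RATE = 0.07  # hypothetical real discount rate
YEARS = 10   # hypothetical benefit horizon, years


def project_npv(delta_p: float, consequence: float, cost: float,
                rate: float = RATE, years: int = YEARS) -> float:
    """NPV ($M) when each year's benefit is risk avoided = delta_p * consequence."""
    risk_avoided = delta_p * consequence
    pv_benefits = sum(risk_avoided / (1.0 + rate) ** t for t in range(1, years + 1))
    return pv_benefits - cost


def monte_carlo(n: int = 20_000, seed: int = 1) -> List[float]:
    """Draw uncertain inputs from hypothetical distributions and return NPV draws."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n):
        delta_p = rng.uniform(0.0, 0.002)                       # annual probability reduction
        consequence = rng.lognormvariate(math.log(500.0), 1.0)  # $M, median 500
        cost = rng.triangular(2.0, 6.0, 3.0)                    # $M: low, high, mode
        draws.append(project_npv(delta_p, consequence, cost))
    return draws


# Hypothetical base case and one-way ranges for the tornado table.
BASE: Dict[str, float] = {"delta_p": 0.001, "consequence": 500.0, "cost": 3.0}
RANGES: Dict[str, Tuple[float, float]] = {
    "delta_p": (0.0002, 0.002),
    "consequence": (100.0, 2000.0),
    "cost": (2.0, 6.0),
}


def tornado(base: Dict[str, float],
            ranges: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float, float]]:
    """Swing each input over its range with the others held at base values."""
    rows = []
    for name, (low, high) in ranges.items():
        npv_low = project_npv(**{**base, name: low})
        npv_high = project_npv(**{**base, name: high})
        rows.append((name, npv_low, npv_high))
    # Widest swing first, which is the bar order of a tornado diagram.
    rows.sort(key=lambda row: abs(row[2] - row[1]), reverse=True)
    return rows


if __name__ == "__main__":
    draws = monte_carlo()
    share_negative = sum(d < 0 for d in draws) / len(draws)
    print(f"mean NPV = {statistics.mean(draws):.2f} $M; P(NPV < 0) = {share_negative:.1%}")
    for name, lo, hi in tornado(BASE, RANGES):
        print(f"{name:>12}: {lo:8.2f} to {hi:8.2f} $M")
```

The Monte Carlo share of negative draws answers whether a project could have lost money, while the tornado ordering shows which input drives that answer; in this hypothetical setup the consequence range dominates.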

DHS clearly hoped to build evidence of the effectiveness of its R&D expenditures, and that evidence did emerge. Beyond that, the cases and methods generated interest within DHS line agencies, led to DHS interaction with the Office of Management and Budget, and are building support for retrospective benefit-cost analysis.

Acknowledgement and Disclaimer

This research was supported by the United States Department of Homeland Security through the National Center for Risk and Economic Analysis of Terrorism Events (CREATE) under Basic Ordering Agreement HSHQDC-17-A-B004. However, any opinions, findings, conclusions, or recommendations in this document are those of the authors and do not necessarily reflect the views of the United States Department of Homeland Security or the University of Southern California.

References

Bellinger, W. (2018). Decision Rules, in Farrow, S., ed., Teaching Benefit-Cost Analysis: Tools of the Trade, Edward Elgar, Cheltenham.

Farrow, S. and S. Shapiro (2009). The Benefit-Cost Analysis of Homeland Security Expenditures, Journal of Homeland Security and Emergency Management, 6(1):1-20.

Roberts, F. (2019). From Football to Oil Rigs: Risk Assessment for Combined Cyber and Physical Attacks. Journal of Benefit-Cost Analysis, 10(2):251-273.

Von Winterfeldt, D., S. Farrow, R. John, J. Eyer, A. Rose and H. Rosoff (2019). The Value of Homeland Security Research, Risk Analysis (early view).